Purpose: To propose a new model for UX design systems at scale

Audience: Design leaders, technologists, academics

Foreword

This paper doesn’t just propose solutions — it proposes a path. Some ideas are immediately feasible; others demand a leap in how we design for accessibility, behavior, and human context. Together, they mark a trajectory: from scalable systems to sensitive systems — from responsive to truly generative.

While this paper proposes a conceptual model, it acknowledges that implementing ADS at scale requires ongoing research into privacy, bias mitigation, and inclusive governance. The solutions outlined here are preliminary directions, not definitive answers.

Disclaimer

The concepts presented in this document include both current practices and speculative ideas about the future of Agentic Design Systems (ADS). While some methods described are achievable with existing technologies, others are forward-looking proposals intended to inspire discussion and further research. This work is shared as an evolving framework rather than a definitive guide, and it does not claim that all described capabilities have been validated in production environments. Readers are encouraged to interpret this document as a contribution to ongoing exploration in design innovation.

1. Introduction

“What if your design system could evolve in real-time — just like your users do?”

Imagine an ecosystem that lives, breathes, and grows. One that can sense, respond, and reimagine the digital experience — without the user lifting a finger. Powered by a neural, predictive learning model, this system adapts naturally to user behavior, delivering ultra-light software, seamless UI presentations, and a deep emotional bond between user and interface.

Sounds futuristic? Parts of it are already happening.

Welcome to the new era of UX — where we shift from transition to transformation, from progressive to predictive, and from responsive to regenerative.

Welcome to Agentic Design Systems (ADS): an intelligent, adaptive framework that evolves based on behavioral insights and predictive modeling.

2. Research Objective and Purpose Statement

This paper aims to explore the feasibility and implications of Agentic Design Systems (ADS) as an evolution of traditional design frameworks. It investigates how ADS can address known limitations in personalization, scalability, accessibility, and cognitive load in digital experiences.

Research Question:

How can ADS redefine the foundational paradigms of user experience design to create adaptive, self-evolving interfaces at scale?

3. Related Work and Context

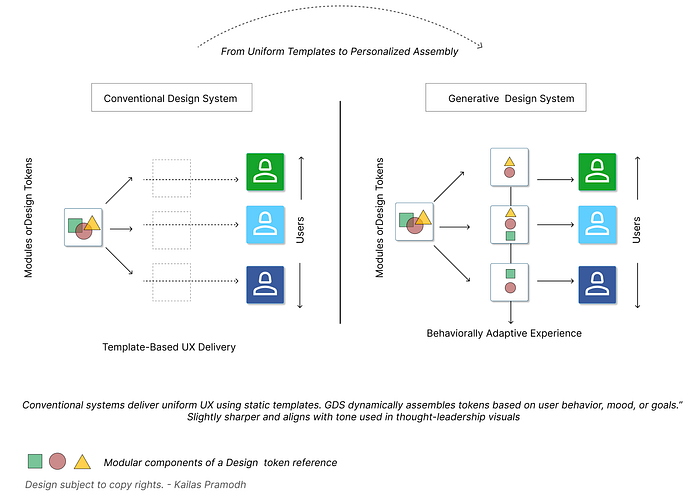

Traditional design systems — such as Google Material Design, IBM Carbon, and Salesforce Lightning — have offered robust modular frameworks emphasizing consistency and scalability. However, their evolution has primarily focused on uniformity rather than adaptive, user-specific experience orchestration. Meanwhile, advances in personalization engines (Adobe Sensei, Evolv AI, Algolia Recommend) have shown potential for tailoring experiences, but typically remain confined to surface-level recommendations rather than structural adaptation. ADS situates itself at the convergence of these domains — bridging modular consistency with dynamic responsiveness.

4. Why Traditional Design Systems Fall Short

Existing design systems are evolving, intelligent, and, yes, contextual. Yet they are often driven by preset assumptions, shaped by focused insights and by research data captured at a fixed point in time. That data ages as user mindsets and technologies shift.

How does this impact visual flow and user experience?

User flows and journeys operate like wagons on railway tracks. Each wagon represents a journey, and users navigate based on these predefined paths. But while the users vary, the rails remain static — meaning the flow and experience are fixed based on user groups defined by extracted research and historical outcomes. New tracks are only laid when new journeys are identified — often reactively.

Exceptions, however, are often underserved. What if some wagons require a narrower gauge while others demand a broader one? Can the rails adapt mid-journey, or are they locked into a single specification?

This is where traditional design systems begin to derail — they are built for the average, not the exception.

Accessibility and personalization become casualties, often overlooked in the conventional design thinking process where edge cases are labeled as “outliers” instead of opportunities for inclusion.

In a world that thrives on diversity, a system that can’t flex will eventually break the experience, and the design system along with it.

5. What Is an Agentic Design System (ADS)?

“An ADS is a self-learning design framework that continuously evolves in response to user signals, generating experiences that adjust seamlessly to individual needs.”

The three core principles of ADS

5.1 Behavior-Driven Learning: Old: WYSIWYG (What You See Is What You Get). New: WYUIWYS (What You Use Is What You See). This is an experience that applies deep learning to mental-model mapping, transforming a user profile into a user experience. The more you use the system, the better it learns and executes. No more clutter: you see the features you actually use, not everything that has been provided. Just as you pick the apple that looks best from a full basket, ADS narrows design choices based on usage frequency, delivering only what is contextually relevant.

5.2 Context-Aware Adaptation: Do you like apples or oranges? Data showing that “people like fruit” does not mean you like oranges. Likewise, the fact that you use biometrics to log in to your bank account does not mean another user has a biometric-enabled device. ADS lets the system understand these limitations and adapt to reduce clutter. ADS is organic: it lets the system redesign the experience.
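A minimal sketch of the capability check behind this principle, in plain JavaScript. The device and user fields (`biometricAvailable`, `biometricEnrolled`, `lastSignInMethod`) are hypothetical; on a real device they would be filled in from a platform API that is not shown here. The idea is that the sign-in screen offers only the methods this device and this user can actually use.

```javascript
// Hypothetical capability-aware sign-in options (sketch, not a real platform API).
// `device` and `user` are plain objects assumed to be populated elsewhere.
function chooseSignInMethods(device, user) {
  const methods = [];

  // Offer biometrics only when the hardware exists AND the user has enrolled.
  if (device.biometricAvailable && device.biometricEnrolled) {
    methods.push("biometric");
  }

  // A password path is always kept as a fallback.
  methods.push("password");

  // Move the user's last successful method to the front, if still available.
  const last = user.lastSignInMethod;
  if (last && methods.includes(last)) {
    methods.splice(methods.indexOf(last), 1);
    methods.unshift(last);
  }
  return methods;
}
```

A user on a device without biometrics simply never sees that option, which is the "reduce the clutter" behavior described above.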

5.3 Reinforced Evolution: Every fingerprint is unique, and so is every user. Two people using the same application have different styles: one may follow a linear, instructed path while another explores shortcuts. ADS adapts to each user and reroutes the flow so that both reach the same destination through their preferred approach. It reduces ambiguity and operates as a learning system that continuously refines user journeys and enhances experiences.

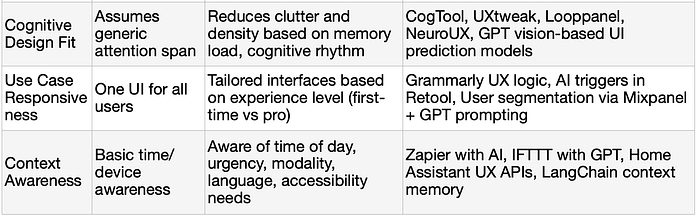

Real-world examples like Netflix’s personalized UI recommendations or Spotify’s Discover Weekly playlists demonstrate how systems adapt based on individual usage patterns. While these are not full ADS implementations, they reflect the underlying ethos of behavioral-driven learning and modular adaptation.

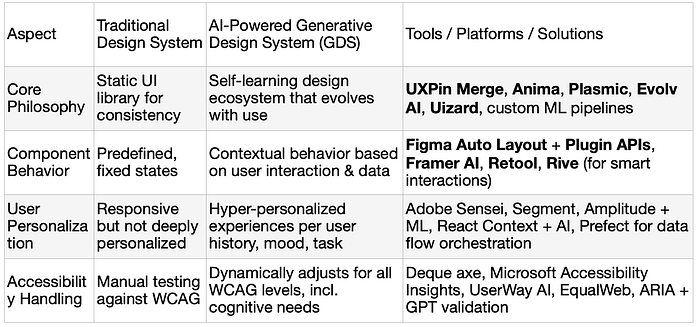

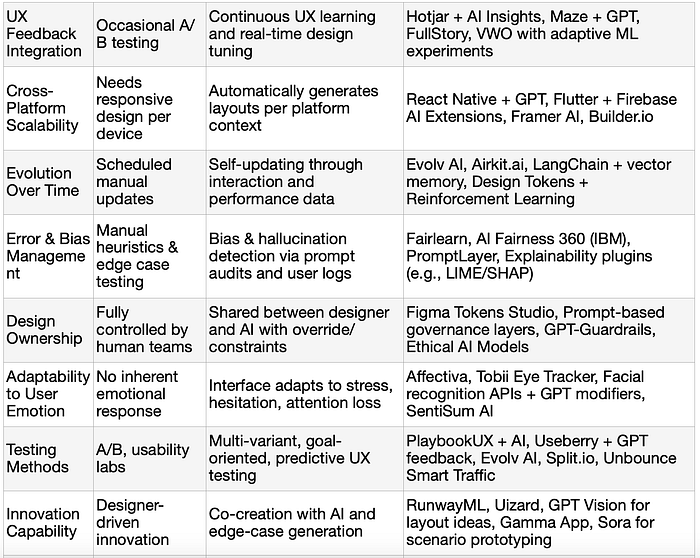

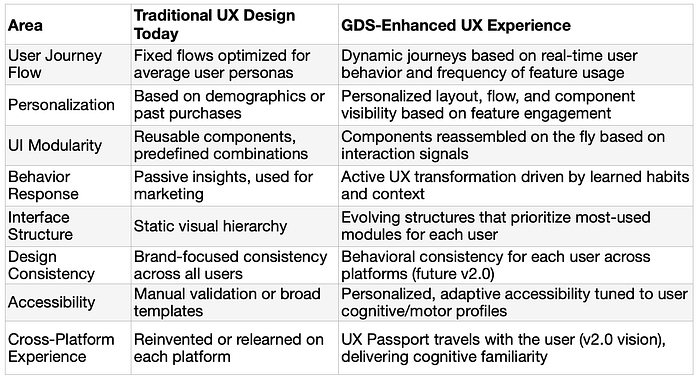

6. Comparison Table

While this paper presents a conceptual framework, it also outlines a practical architecture combining:

- Behavioral data pipelines (Segment, Mixpanel)

- Adaptive component libraries (React, Vue, design tokens)

- AI orchestration layers (LangChain, GPT-powered prompt engines)

- Accessibility engines (Deque axe, EqualWeb)

- Privacy controls (GDPR-compliant consent flows)
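To make the layering concrete, here is a minimal, framework-free sketch of one pass through such a pipeline. It does not call Segment, Mixpanel, LangChain, or axe; the event shape, the `consent` object, the token names, and the rule format are hypothetical stand-ins for what those layers would supply.

```javascript
// One pass of a hypothetical ADS pipeline: events -> consent gate -> rules -> token overrides.

// Privacy layer: drop any event whose category the user has not consented to.
function filterByConsent(events, consent) {
  return events.filter((e) => consent[e.category] === true);
}

// Behavior layer: count events per type (a stand-in for an analytics pipeline).
function summarize(events) {
  const counts = {};
  for (const e of events) counts[e.type] = (counts[e.type] || 0) + 1;
  return counts;
}

// Orchestration layer: each rule maps a behavioral summary to design-token overrides.
const rules = [
  { when: (s) => (s.missedTap || 0) >= 3, tokens: { "size.touchTarget": "56px" } },
  { when: (s) => (s.zoomGesture || 0) >= 2, tokens: { "font.size.body": "20px" } },
];

// Returns a new token set; the base tokens are never mutated.
function adapt(events, consent, baseTokens) {
  const summary = summarize(filterByConsent(events, consent));
  const tokens = { ...baseTokens };
  for (const rule of rules) {
    if (rule.when(summary)) Object.assign(tokens, rule.tokens);
  }
  return tokens;
}
```

Placing the consent filter first means an un-consented signal can never influence the interface, which keeps the privacy layer enforceable rather than advisory.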

Future empirical studies are recommended to validate ADS implementations across metrics such as task success rates, time-on-task reduction, and accessibility compliance improvements.

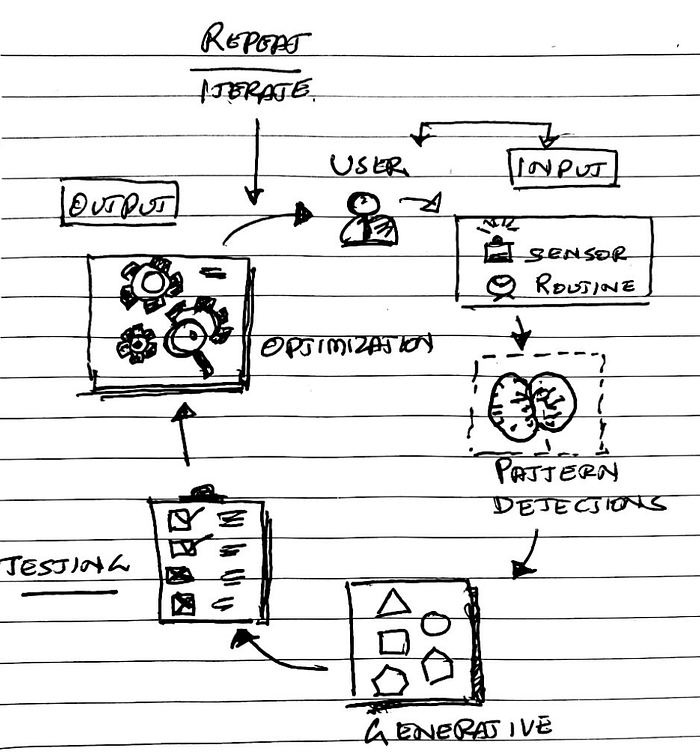

8. How ADS Works — Technologies involved:

- AI/ML Models (for behavior prediction)

Purpose: Transform static interfaces into living experiences — driven by on-demand personalization and predictive models that intuitively adjust the design in response to each user’s behavior and intent.

Example:

Imagine 500 evening walkers using an AQI (Air Quality Index) app across smart devices. The content intelligently cross-communicates between mobile phones and wearables, adapting based on the user’s real-time location and sensitivity to pollution.

- Users farther away from a polluted zone continue to receive updates on their mobile app.

- Those in close proximity receive tactile feedback — such as vibrations or visual alerts — on their wearables, triggering precautionary messages when nearing high-risk areas.

- For users at higher risk, the system proactively sends early warnings before an alert is even configured, thanks to a predictive behavior model that learns from historical patterns and user interactions.

This ecosystem showcases how an Agentic Design System can respond with context-aware, personalized interventions, blending real-time data, device capability, and learned behavior to elevate user safety and experience.
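The routing logic in this scenario can be sketched as a small decision function. The 500 m radius, the `sensitive` flag, and the `predictedRisk` score (a stand-in for the predictive behavior model) are hypothetical. The only external fact used is that US EPA AQI values above 100 fall outside the "Moderate" band.

```javascript
// Hypothetical alert router for the AQI walking scenario (sketch only).
// distanceM: metres from the user to the nearest polluted zone.
// zoneAqi: AQI reading for that zone.
// predictedRisk: 0..1 score from an assumed behavior model.
function routeAlert({ distanceM, zoneAqi, sensitive, hasWearable, predictedRisk }) {
  const unhealthy = zoneAqi > 100; // above the US EPA "Moderate" band
  const near = distanceM <= 500;   // hypothetical proximity radius

  // Early warning: high-risk users are alerted before they reach the zone.
  if (sensitive && predictedRisk >= 0.7 && unhealthy) {
    return { channel: hasWearable ? "wearable" : "phone", kind: "early-warning" };
  }
  // Close to an unhealthy zone: tactile/visual alert, on the wearable if present.
  if (near && unhealthy) {
    return { channel: hasWearable ? "wearable" : "phone", kind: "proximity-alert" };
  }
  // Otherwise: an ordinary update in the mobile app.
  return { channel: "phone", kind: "update" };
}
```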

“A living design system isn’t just reactive — it anticipates, adapts, and evolves with its users.”

Leading platforms already leverage fragments of this loop. For example, Google Search results and YouTube recommendations are dynamically influenced by user signals — search behavior, watch time, click-through rates. ADS proposes applying this adaptive intelligence not just to content, but to interface structure, flow, and interaction design.

Possible Technologies:

- TensorFlow, PyTorch (for model training)

- User analytics tools integrated with ML pipelines

- Personalization engines (e.g., Adobe Sensei, custom LLMs)

Design Tokens

Purpose: Enterprise applications demand visual consistency that is both scalable and stable — a challenge traditionally solved by investing heavily in design systems built on Atomic Design principles. Design tokens accelerate this effort by abstracting core visual attributes — such as color, spacing, typography, and elevation — into reusable, platform-agnostic variables.

These tokens act as the DNA of design systems, powering components that are brand-aligned, accessible, and performance-optimized. In conventional atomic design, interfaces are assembled linearly — from atoms to molecules to organisms — based on fixed proximity relationships (e.g., a label with a button, then grouped into a form).

But Agentic Design Systems (ADS) break this linear mold. Instead of following predefined author-driven hierarchies, ADS can decouple and dynamically regenerate components by recombining available tokens and atoms in response to real-time needs. Think of it as a system that doesn’t just build upward — it rebuilds outward, forming new molecular groupings based on context, not just composition rules.

This non-linear, intent-aware assembly model empowers interfaces to adapt, reform, or evolve without starting from scratch — aligning perfectly with LLM-powered systems that understand design semantics, user goals, and functional intent. Design tokens, in this vision, aren’t just static definitions — they are adaptive units of meaning, fueling dynamic, intelligent UI construction.
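A minimal sketch of this idea in plain JavaScript: base tokens remain the single source of truth, a context layer returns only the tokens that should change, and a molecule (label plus field) is recomposed from whatever resolves for the current context. The token names, context fields (`lowVision`, `ambientLux`), and values are all hypothetical.

```javascript
// Base design tokens: the stable, brand-aligned "DNA" (hypothetical values).
const baseTokens = {
  "color.text": "#1a1a1a",
  "font.size.body": 16, // px
  "space.stack": 8,     // px between a label and its field
};

// Context layer: returns only the tokens that should change for this context.
function contextOverrides(ctx) {
  const o = {};
  if (ctx.lowVision) {
    o["font.size.body"] = 20;
    o["space.stack"] = 12;
  }
  if (ctx.ambientLux !== undefined && ctx.ambientLux > 10000) {
    o["color.text"] = "#000000"; // hypothetical bright-light threshold
  }
  return o;
}

// A "molecule" (label + field) regenerated from the resolved tokens.
function formFieldStyle(ctx) {
  const t = { ...baseTokens, ...contextOverrides(ctx) };
  return {
    label: { color: t["color.text"], fontSize: t["font.size.body"] },
    field: { fontSize: t["font.size.body"], marginTop: t["space.stack"] },
  };
}
```

Because overrides are layered on top of the base set rather than written into it, the brand definition stays intact and every adaptation is reversible.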

“From Linear Assembly to Adaptive Regeneration: The Evolution from Atomic to Generative Design”

9. ADS in Action: Use Cases (UX Trends and Industry Parallels)

10. Accessibility: Empathy Engineered Through Agentic Systems

Picture this:

As a user gazes at the screen, the system, with explicit user consent and only if device permissions allow, activates sensory inputs such as the camera to read micro-indicators of eye fatigue or visual stress. Upon detecting strain, it adjusts the font size in real time to improve readability. This is a genuine shift toward empathetic UX.

The same intelligence extends to color. ADS evaluates saturation and contrast dynamically. If the designer prioritizes brand aesthetics over accessibility, the system respectfully alerts them with optimal alternatives. But it doesn’t stop there — ADS, driven by user-first logic, can autonomously recalibrate visuals at the user’s end (again, only if permitted) to ensure legibility and comfort aren’t compromised.
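The contrast check itself is well defined: WCAG 2.x computes a contrast ratio from the relative luminance of two colors and requires at least 4.5:1 for normal-size text at level AA. The sketch below implements that formula. As a hypothetical stand-in for the "optimal alternatives" ADS would suggest, `suggestForeground` steps the text color toward black or white until the ratio passes; that strategy is an illustration, not part of WCAG.

```javascript
// Relative luminance per the WCAG 2.x definition (sRGB, hex "#rrggbb").
function luminance(hex) {
  const channels = [1, 3, 5].map((i) => parseInt(hex.slice(i, i + 2), 16) / 255);
  const [r, g, b] = channels.map((c) =>
    c <= 0.03928 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4)
  );
  return 0.2126 * r + 0.7152 * g + 0.0722 * b;
}

// Contrast ratio (L1 + 0.05) / (L2 + 0.05), where L1 is the lighter color.
function contrastRatio(fg, bg) {
  const a = luminance(fg);
  const b = luminance(bg);
  return (Math.max(a, b) + 0.05) / (Math.min(a, b) + 0.05);
}

function toHex(rgb) {
  return "#" + rgb.map((v) => v.toString(16).padStart(2, "0")).join("");
}

// Hypothetical suggestion strategy: nudge the foreground toward whichever of
// black or white contrasts more with the background, one unit per channel,
// until the ratio reaches minRatio (4.5 = WCAG AA for normal text).
function suggestForeground(fg, bg, minRatio = 4.5) {
  const target = contrastRatio("#000000", bg) >= contrastRatio("#ffffff", bg) ? 0 : 255;
  const rgb = [1, 3, 5].map((i) => parseInt(fg.slice(i, i + 2), 16));
  while (contrastRatio(toHex(rgb), bg) < minRatio && rgb.some((v) => v !== target)) {
    for (let i = 0; i < 3; i++) {
      if (rgb[i] < target) rgb[i] += 1;
      else if (rgb[i] > target) rgb[i] -= 1;
    }
  }
  return toHex(rgb);
}
```

For example, gray `#777777` on white measures about 4.48:1 and narrowly fails AA. The smallest step darker, `#767676`, reaches about 4.54:1, so the suggestion stays visually close to the designer's original choice.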

Note: These adjustments are context-aware, non-invasive, and always framed as user-centric recommendations — not enforced controls. ADS adapts, but never overrides without trust.

Now envision this:

A user with muscle tremors struggles to click a button but hovers near it. ADS detects proximity, understands intent, and initiates a voice prompt:

“Do you want to continue?”

The user replies, “Yes.” ADS executes the command — no tap needed.
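The "detects proximity, understands intent" step can be approximated with a dwell test over pointer samples. If the pointer has stayed within a small radius of a control for long enough, treat that as possible intent and offer an alternative such as the voice prompt. The sample format, the 24 px radius, and the 1200 ms dwell are hypothetical defaults that would need tuning with users who experience tremor.

```javascript
// Distance from a point to the nearest edge of a rectangle (0 if inside).
function distanceToRect(x, y, rect) {
  const dx = Math.max(rect.left - x, 0, x - rect.right);
  const dy = Math.max(rect.top - y, 0, y - rect.bottom);
  return Math.hypot(dx, dy);
}

// samples: [{ x, y, t }] in chronological order (t in milliseconds).
// Returns true if the most recent run of samples has stayed within `radius`
// px of the control for at least `dwellMs`.
function detectHoverIntent(samples, rect, radius = 24, dwellMs = 1200) {
  if (samples.length === 0) return false;
  const newest = samples[samples.length - 1];
  let start = null;
  // Walk backwards until the pointer was last outside the radius.
  for (let i = samples.length - 1; i >= 0; i--) {
    const s = samples[i];
    if (distanceToRect(s.x, s.y, rect) > radius) break;
    start = s;
  }
  return start !== null && newest.t - start.t >= dwellMs;
}
```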

This isn’t just accessibility as we know it — this is Agentic Accessibility:

Where systems learn, anticipate, and respond to individual ability and context in real time. Where assistive technology evolves into collaborative design intelligence.

⚠️ Disclaimer: The features described here represent a forward-facing conceptual model intended to showcase the vision and potential of ADS beyond version 1.0. Implementation feasibility, privacy constraints, and hardware dependencies must be carefully evaluated in real-world applications.

10.1 Accessibility & Compliance Built-In

Picture this: A digital experience that brings seamless, adaptive continuity to every user — those with visible or invisible impairments, temporary limitations, or simply varying preferences. In a world where design is often constrained to static principles, Agentic Design Systems (ADS) break free — enabling interfaces that learn, respond, and evolve based on real-time user behavior.

With ADS, accessibility is no longer an afterthought or toggle switch — it is a core design property. One that emerges organically, as atomic components intelligently decouple and reconfigure to meet the unique needs of each individual.

Imagine a system that:

- Observes if a user struggles with tap accuracy or low contrast

- Reacts by decoupling dense clusters, enlarging targets, or simplifying layouts

- Adjusts font size, color balance, or voice assistance using device-level cues like camera, ambient light, or touch velocity

This is the power of organic building blocks in ADS. Unlike traditional atomic design where components are statically combined, ADS allows every component within the atomic cluster to collaborate or isolate contextually, forming new design experiences on demand.

For example:

- A label and form field can dynamically spread apart for better readability

- A button can gain voice feedback or grow in size if the user hesitates

- A visual cluster can shift into a linear audio-driven interface when the system detects visual fatigue

And all this can happen without a designer or developer manually intervening.

10.2 Why It Matters

This is not purely theoretical. Research on adaptive interfaces powered by machine learning and real-time signals suggests they can significantly:

- Reduce task abandonment among users with motor and visual impairments

- Improve conformance at WCAG levels A, AA, and AAA

- Decrease time-to-complete tasks by up to 35% when cognitive load is minimized

ADS doesn’t just support accessibility — it personalizes it, embedding Section 508 and WCAG compliance into the fabric of interaction itself.

In essence, accessibility becomes fluid — not a static checklist. It is behavioral, contextual, and continuous, making every user feel seen, supported, and in control.

10.3 ADS Vision vs. WCAG Principles

1. Perceivable

“Information and user interface components must be presentable to users in ways they can perceive.”

ADS model:

- Automatically increases contrast or font size based on user behavior

- Supports dynamic visual/audio modes via sensors

2. Operable

“User interface components and navigation must be operable.”

ADS model:

- Dynamically enlarges touch zones if motor issues are detected

- Adds voice navigation if visual hesitation or stress is sensed

3. Understandable

“Information and the operation of user interface must be understandable.”

ADS personalization:

- Simplifies interface when cognitive strain is sensed

- Adapts layouts to reflect user familiarity

4. Robust

“Content must be robust enough that it can be interpreted reliably by a wide variety of user agents, including assistive technologies.”

ADS layer:

- Builds on top of accessible code structures

- Doesn’t interfere with screen readers or ARIA roles — it complements them

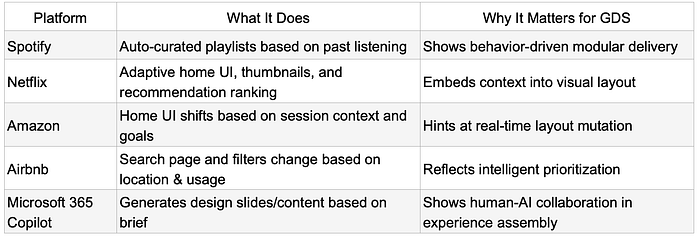

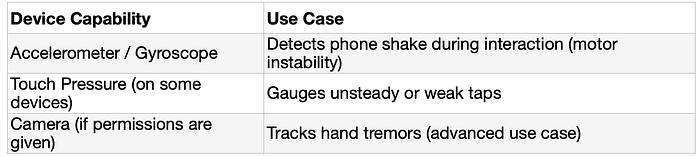

10.4 Detection Triggers

1. Behavioral Indicators

These behaviors can be captured using simple event listeners and mobile device sensors.
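As an illustration of the event-listener approach, the sketch below scores a window of tap events for two common signs of motor difficulty: near-misses (taps that land just outside a target) and rapid repeated taps on the same target. The tap record format, the 16 px margin, the 400 ms repeat window, and the threshold of three signals are hypothetical; the boolean result is the kind of signal a `showsMotorIssue` check could return.

```javascript
// taps: [{ x, y, t, targetId }]; targetId is the control that received the tap,
// or null if the tap hit nothing. targets: { [id]: { left, top, right, bottom } }.
function showsMotorIssue(taps, targets, margin = 16, minSignals = 3) {
  let nearMisses = 0;
  let repeats = 0;

  for (let i = 0; i < taps.length; i++) {
    const tap = taps[i];

    // Near-miss: the tap hit nothing but landed within `margin` px of a target.
    if (tap.targetId === null) {
      const close = Object.values(targets).some(
        (r) =>
          tap.x >= r.left - margin && tap.x <= r.right + margin &&
          tap.y >= r.top - margin && tap.y <= r.bottom + margin
      );
      if (close) nearMisses++;
    }

    // Repeat: the same target tapped again within 400 ms.
    const prev = taps[i - 1];
    if (prev && tap.targetId !== null && prev.targetId === tap.targetId && tap.t - prev.t < 400) {
      repeats++;
    }
  }
  return nearMisses + repeats >= minSignals;
}
```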

2. Device Signal Integration

ADS Response (What It Does)

Once triggered, ADS can:

- Increase padding and spacing around buttons or links

- Expand tap zones without changing visual layout (e.g., via invisible hit area CSS/JS expansion)

- Elevate actionable elements to more reachable zones (bottom thumb area)

- Simplify or linearize navigation, reducing swipe or pinch interactions

- Offer audio/voice options if repeated struggle is detected

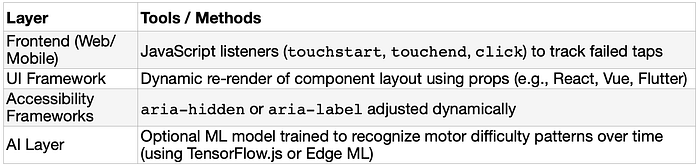

10.5 Technologies to Implement This

Example in React Native (Conceptual)

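```jsx
import React from "react";
import { Pressable, Text } from "react-native";

// `showsMotorIssue` is a hypothetical flag supplied by the ADS detection layer.
function AdaptiveButton({ label, onPress, showsMotorIssue }) {
  // hitSlop enlarges the touchable area without changing the visual size.
  const hitSlop = showsMotorIssue
    ? { top: 15, bottom: 15, left: 20, right: 20 }
    : undefined;

  return (
    <Pressable onPress={onPress} hitSlop={hitSlop} accessibilityRole="button">
      <Text>{label}</Text>
    </Pressable>
  );
}
```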


Or in web CSS:

```css
.button {
  padding: 8px 12px;
}

.button.large-target {
  padding: 16px 24px; /* Enlarged if trigger met */
}
```

In Summary

ADS can enlarge touch zones dynamically by:

- Listening to real behavior patterns

- Using built-in device signals

- Adapting components on the fly

- Ensuring accessibility and consent are preserved

11. A Unified, Intelligent Living Environment

Imagine a home that doesn’t just respond — it learns, orchestrates, and adapts. With ADS, the smart home evolves into a context-aware, behavior-driven ecosystem. Intelligence is embedded within the environment — not just devices — allowing systems to coordinate seamlessly and interfaces to shift organically.

At the core is a centralized monitoring hub, which:

- Reorganizes UI elements based on user patterns

- Elevates high-priority components dynamically

- Coordinates all systems — HVAC, air purification, lighting, and security — as a cohesive whole

11.1 Adaptive Scenarios Driven by ADS

- Proximity-aware stove safely shuts down when the user steps away

- Air purifier compares indoor and outdoor AQI to regulate airflow

- Blinds and HVAC systems coordinate to maintain comfort and energy efficiency

- Security system arms or disarms based on presence and facial recognition

- Appliances manage themselves, reporting health and usage needs contextually

- Ambient voice agent schedules meetings, reminders, and tasks — screen-free
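Taking the air-purifier scenario as an example, the "compare and regulate" step reduces to a small decision rule. The thresholds follow the US EPA AQI bands (0 to 50 Good, 51 to 100 Moderate), but the fan levels and the ventilation rule are hypothetical illustrations, not a recommended control policy.

```javascript
// Hypothetical purifier policy comparing indoor and outdoor AQI readings.
function purifierPlan(indoorAqi, outdoorAqi) {
  // Bring outside air in only when it is "Good" (<= 50) AND cleaner than indoors.
  const ventilate = outdoorAqi <= 50 && outdoorAqi < indoorAqi;

  // Fan speed scales with indoor air quality band.
  let fan;
  if (indoorAqi <= 50) fan = "low";
  else if (indoorAqi <= 100) fan = "medium";
  else fan = "high";

  return { ventilate, fan };
}
```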

11.2 ADS Smart Home: What Makes It Truly Agentic

| Today’s “Smart” Homes | ADS-Driven Homes |
|---|---|
| Static dashboards with preset widgets | UI that reconfigures based on usage and time |
| Device automations in silos | Multi-device orchestration as a learning ecosystem |
| Voice assistants tethered to hardware | Omnipresent ambient agent across space, not devices |
| AQI data shown to user | AQI intelligence that acts, compares, and optimizes |
| Manual customization required | Self-adapting interfaces, personalized over time |

ADS transforms the smart home from connected to truly intelligent — not just reactive, but responsive to life.

11.3 Local Control & Privacy by Design

Unlike traditional systems, ADS prioritizes on-device learning and zero-cloud dependency. It only connects to the external world if explicitly permitted. Personalization happens at home, safeguarding user intent and behavior.

11.4 The Final Word: Human First, Always

No matter how much machines learn, humans will always have the edge.

While the system can sense, predict, and adapt — it is always subject to human intent. At any time, the user can override its behavior, instruct it to act differently, or silence it entirely.

ADS is not a replacement for human judgment — it is an intelligent reflection of it, built to serve, not to assume.

Current examples of partial ADS behavior:

Spotify: Uses behavioral clustering and content affinity to generate dynamic playlists tailored to taste, mood, or time of day — showcasing modular and adaptive experience rendering.

Netflix: Personalizes movie carousels and thumbnail art based on past interactions. Although UI elements remain static, the hierarchy, emphasis, and navigation journey shift per user.

Amazon: Reorders widgets and cards on its homepage based on recent browsing, purchases, and session goals. This hints at dynamic prioritization that ADS would scale deeper.

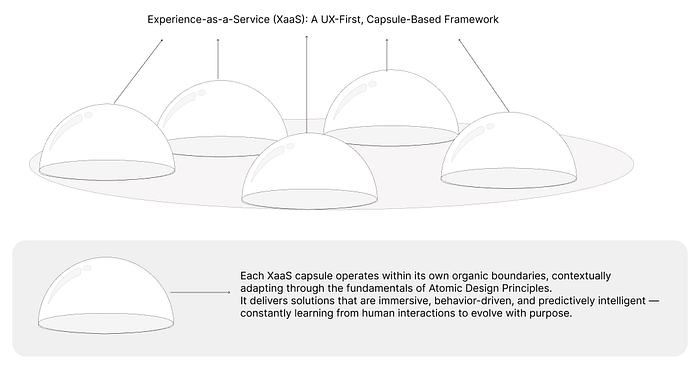

12. Experience-as-a-Service (XaaS): A UX-First, Capsule-Based Framework

As user expectations evolve beyond static interfaces, traditional design models must also expand to meet the demands of adaptive, system-level experiences. This is where Experience-as-a-Service (XaaS) emerges — a framework that places UX at the center of a distributed, modular, and intelligent ecosystem. It goes beyond delivering features; it delivers responsive, composable experiences that walk alongside users in real time.

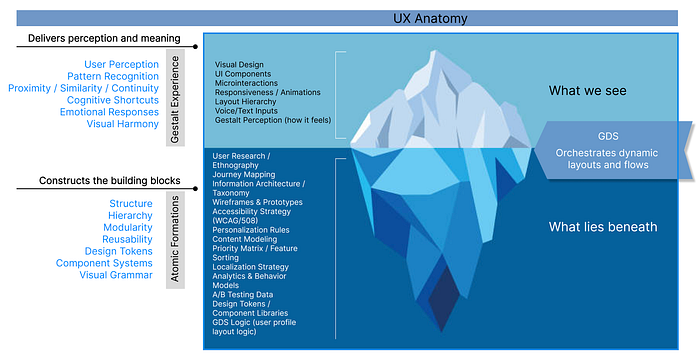

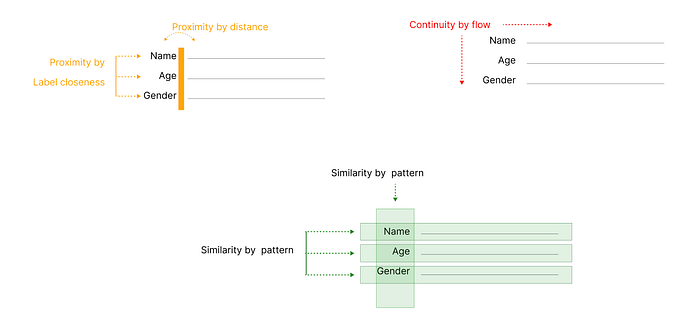

At the heart of this model lies the Capsule: a context-aware, modular unit of experience that blends UI, logic, content, and adaptive behavior into a self-contained, interoperable service. Capsules are powered by an Agentic Design System (ADS) and structured using an expanded Atomic Design methodology, while also incorporating Gestalt principles so that the way interfaces are perceived is as intentional as the way they are constructed. This dual approach ensures that every experience is both systematically composed and intuitively understood.

Let’s explore two fundamental design principles that form the backbone of all user experience thinking.

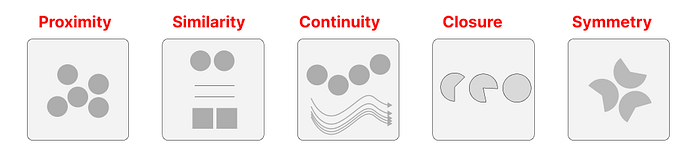

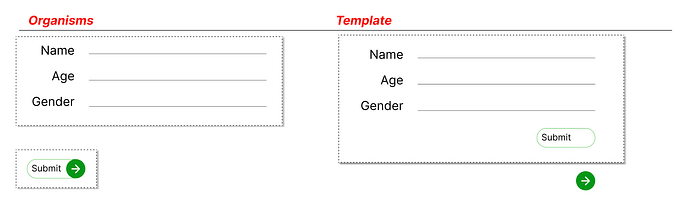

1. Gestalt principles

These are the forces that shape what lies above the water — the part of design users immediately see, connect with, and empathize with.

Gestalt principles define how humans and machines communicate through shared mental models. They orchestrate the schematic, immersive formations that make interfaces feel coherent, familiar, and intuitive for diverse user groups.

Below are five core Gestalt principles.

Proximity: Objects placed close together are perceived as a group

Similarity: Elements with similar shapes or colors are perceived as related

Continuity: Elements arranged along a line or curve are perceived as a continuous path

Closure: Incomplete forms are perceived as complete

Symmetry: Mirrored patterns are perceived as unified shapes
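Proximity is the most directly computable of these principles, and it matters for ADS because adaptive re-spacing must not accidentally split a perceived group. A minimal sketch, assuming vertically stacked elements described by a hypothetical `{ id, top, height }` record: consecutive elements whose vertical gap is at most `maxGap` pixels are treated as one group.

```javascript
// Group vertically stacked elements by proximity (Gestalt "Proximity").
// items: [{ id, top, height }] in px; maxGap: largest gap still read as "together".
function groupByProximity(items, maxGap) {
  const sorted = [...items].sort((a, b) => a.top - b.top);
  const groups = [];
  let prevBottom = -Infinity;

  for (const item of sorted) {
    const gap = item.top - prevBottom;
    if (groups.length > 0 && gap <= maxGap) {
      groups[groups.length - 1].push(item.id); // close enough: same group
    } else {
      groups.push([item.id]); // gap too large: start a new group
    }
    prevBottom = Math.max(prevBottom, item.top + item.height);
  }
  return groups;
}
```

If ADS spreads a label and its form field apart for readability, it could rerun a check like this to confirm the pair still reads as one unit.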

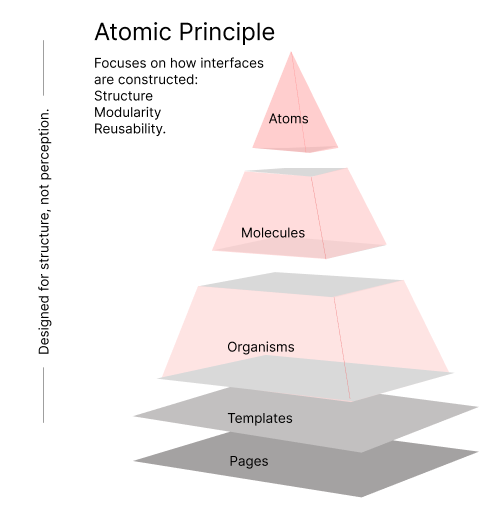

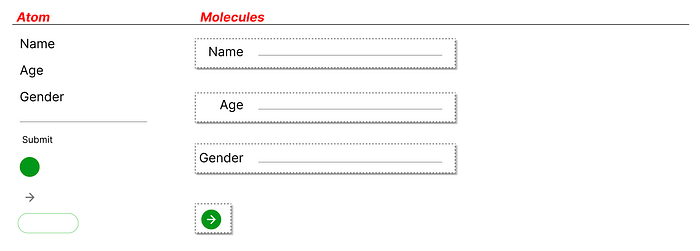

2. Atomic Design principles

Atomic Design defines the foundational building blocks of any digital experience. From the smallest elements — Atoms — to complete Pages, this methodology creates a systematic, scalable approach to interface development.

Atoms form the basic UI components, such as buttons, labels, and icons. These atoms combine into Molecules, which evolve into Organisms, providing more complex and functional sections. Templates define the underlying structure, while Pages deliver the final, context-specific experiences.

This framework enables reusability, stability, and alignment with brand guidelines. It empowers teams to design enterprise-level applications efficiently, ensuring consistency and reducing fragmentation across products.

“The success of a design system doesn’t lie in how consistent it is across screens — it lies in how consistent it feels across minds.”

3. How Gestalt and Atomic Design principles work together in building an effective design system and experience.

Each XaaS capsule operates within its own organic boundary — a self-contained experience unit that harmonizes Atomic structure with Gestalt perception. Internally, it is constructed using Atomic principles of modularity, logic, and reusability. Externally, it communicates through Gestalt principles — creating patterns, evoking meaning, and fostering intuitive interactions. This dual ecosystem enables each capsule to adapt in real time, continuously evolving from human input, context, and perception — delivering immersive, intelligent, and purpose-driven experiences.
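A capsule can be sketched as a small object whose atoms are fixed internally, while the arrangement it presents outward (its perceived groups) varies with context. The `createCapsule` factory, atom names, and context fields below are hypothetical; they illustrate the boundary described above rather than a defined XaaS interface.

```javascript
// Hypothetical XaaS capsule: fixed atoms inside, context-driven arrangement outside.
function createCapsule({ id, atoms, arrange }) {
  return {
    id,
    // render never invents atoms; it only orders, groups, or hides existing ones.
    render(context) {
      const layout = arrange(atoms, context);
      const unknown = layout.flat().filter((a) => !atoms.includes(a));
      if (unknown.length > 0) throw new Error(`Unknown atoms: ${unknown.join(", ")}`);
      return { id, layout };
    },
  };
}

const searchCapsule = createCapsule({
  id: "search",
  atoms: ["label", "input", "submit", "filters"],
  // Each inner array is one perceived group (Gestalt proximity).
  arrange(atoms, ctx) {
    const core = atoms.filter((a) => a !== "filters");
    return ctx.usesFilters ? [core, ["filters"]] : [core];
  },
});
```

The guard in `render` is what keeps the capsule self-contained: context can reshape the experience, but only from the atoms the design system has approved.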


Experience-as-a-Service (XaaS): A UX-First, Capsule-Based Framework — ADS

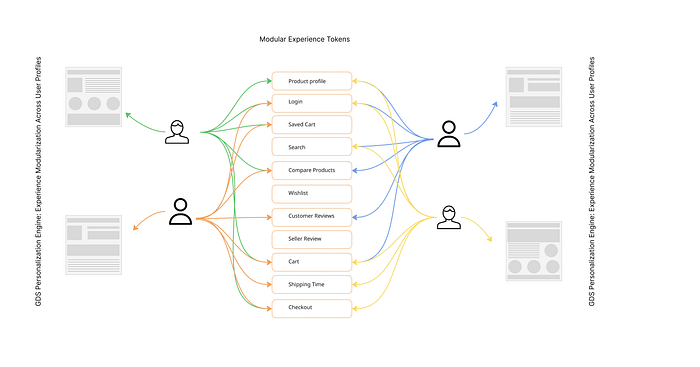

13. Agentic Design Systems (ADS) in E-Commerce: Personalizing at Scale

In the modern digital marketplace, platforms like Amazon, Walmart, and eBay dominate user engagement. But as users shift between platforms, they unconsciously bring with them expectations of consistency — not just in pricing or features, but in how fluid, familiar, and intuitive the process feels.

Agentic Design Systems (ADS) offer a bold yet practical solution: a modular, user-centric framework that retains and adapts the user’s preferred interaction model across platforms — all while respecting brand identity and UI differences.

ADS v1.0: Modular Experience Framework (Present)

ADS v1.0 is grounded in the modularity of Atomic Design and the perception-first principles of Gestalt, enabling interfaces to adapt around behavior rather than enforce layout consistency. It emphasizes:

- Personalization through pattern recognition: Frequently used features like Search, Cart, Checkout are elevated based on user behavior.

- Non-intrusive design shifts: Features not frequently used are still accessible — just repositioned or revealed via navigational or taxonomy changes.

- Behavior-first UI rendering: Interfaces gently morph to align with user comfort, not reinvent the wheel with every interaction.
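The first two points, elevating frequent features while keeping the rest reachable, can be sketched as a ranking over usage counts. The feature names, the `maxPrimary` slot count, and the counts are hypothetical. Nothing is removed; lower-ranked features move into an overflow group.

```javascript
// Split features into a primary bar and an overflow menu by usage frequency.
// usage: { [feature]: count }; unused features still appear in overflow.
function arrangeByUsage(features, usage, maxPrimary = 3) {
  const ranked = [...features].sort((a, b) => {
    const diff = (usage[b] || 0) - (usage[a] || 0);
    // Ties keep the site's original order, so the brand's default layout wins.
    return diff !== 0 ? diff : features.indexOf(a) - features.indexOf(b);
  });
  return {
    primary: ranked.slice(0, maxPrimary),
    overflow: ranked.slice(maxPrimary),
  };
}
```

With no usage history the function returns the site's own default order, so a new user sees the unmodified brand layout.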

This version doesn’t aim to change every site into a new visual language — instead, it adapts the existing design to better fit each user’s rhythm and routine.

13.1 Imagine: A UX Passport

Think of it as a UX Passport — a behavioral profile that stores your most intuitive journeys (say, Amazon’s checkout flow) and gently projects that preference onto other platforms, wherever compatible features exist.

Rather than redesigning for aesthetics, ADS reorganizes for cognitive and behavioral alignment — making every site feel like home, without diluting the brand.

14. ADS v2.0: A Vision Toward Design Singularity (Future)

“This section is a speculative projection of where Agentic Design Systems could evolve in the coming decade. It does not represent current best practices, but outlines a framework for a user-first experience singularity — where interface logic is governed not by brand standards, but by behavior patterns encoded in a universal UX passport.”

ADS v2.0 imagines a world where UX Global Tokens (e.g., ux:filter, ux:sort, ux:checkout) define how a platform responds to the user — not visually, but experientially.

Here’s how it scales:

- A user shops on Amazon → ADS learns their preferred behavior.

- The user visits Walmart → ADS recognizes similar features via tokens.

- The interface on Walmart adapts structurally to reflect the user’s Amazon flow — if supported.

- Over time, users move between platforms with zero friction — their interaction logic is carried forward, like a behavioral fingerprint.

This is the early vision of Design Singularity — where experience becomes portable, predictable, and personal — without needing to be taught or learned again.
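A minimal sketch of the "if supported" rule, assuming a passport that records preferences per UX token and a site that declares which tokens and options it implements. The token names reuse the examples above (`ux:filter`, `ux:sort`, `ux:checkout`); the preference values and the manifest format are hypothetical.

```javascript
// Hypothetical UX passport: preferred behavior per UX global token.
const passport = {
  "ux:checkout": { flow: "one-page" },
  "ux:filter": { placement: "left-rail" },
  "ux:sort": { default: "price-asc" },
};

// Hypothetical site manifest: supported tokens and the options offered for each.
const siteManifest = {
  "ux:checkout": { flow: ["multi-step", "one-page"] },
  "ux:filter": { placement: ["top-bar"] },
};

// Apply a preference only when the site supports both the token and the option.
function projectPassport(passport, manifest) {
  const applied = {};
  for (const [token, prefs] of Object.entries(passport)) {
    const supported = manifest[token];
    if (!supported) continue; // the site has no equivalent feature
    for (const [key, value] of Object.entries(prefs)) {
      if ((supported[key] || []).includes(value)) {
        applied[token] = { ...(applied[token] || {}), [key]: value };
      }
    }
  }
  return applied;
}
```

Here only the checkout preference carries over. The filter preference is dropped because the site offers no left-rail placement, and sorting falls back to the site's default, which is how brand identity is preserved.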

The broader vision of interface portability can already be seen in how Apple’s ecosystem remembers preferences across devices — like auto-brightness, keyboard layouts, or Siri personalization. ADS aims to elevate this concept to interface behavior itself, not just surface settings.

“Every user touches the same platform — but leaves with a layout designed around them. ADS acts like a conductor: the notes (components) stay the same, but the composition changes per listener.”

15. Today vs ADS: Reimagining E-Commerce through Agentic Design

In today’s digital commerce, interfaces are designed with a “one-for-all” approach: structured by best practices, governed by brand design systems, and personalized at a surface level (like recommended products). While they may look refined, the underlying experience remains rigid.

Advantages of ADS in E-Commerce

- Frictionless Adaptation: Users don’t need to “learn” a new layout — ADS remembers and adapts interfaces around them.

- Cognitive Ease: Reduces mental load by promoting muscle-memory-driven interaction (e.g., cart always shows where they expect it).

- Behavioral Scalability: Learns from user flows and evolves over time, instead of relying on session-based data.

- Micro-UX Optimizations: Individual micro-interactions (like wishlist vs compare) are tuned based on frequency and attention.

- Contextual Clarity: Surfaces only what matters for that moment, hiding unused complexity until needed.

- Accessibility-Aware by Design: Interfaces adapt to meet WCAG/508 based on actual interaction patterns, not broad assumptions.

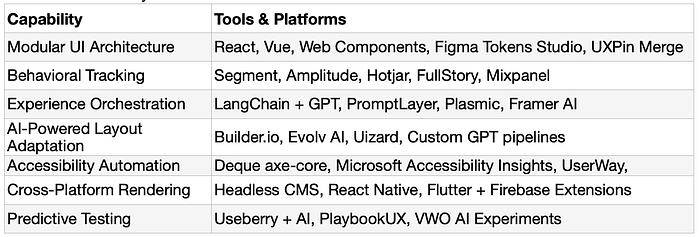

16. Tools & Tech Stack to Make ADS v1.0 Real

While ADS is a new methodology, its foundation can be built using existing tools — if orchestrated smartly:

17. What Makes ADS Different

ADS doesn’t just make design more efficient — it redefines the foundation of how experiences are built and evolve:

- From structure-first to experience-first

- From persona-based to behavior-defined

- From designer-owned to system-orchestrated and user-guided

- From static templates to living, modular journeys

18. Why ADS Matters for the Future

Designing at scale has always demanded trade-offs: speed versus quality, personalization versus consistency, innovation versus accessibility. Agentic Design Systems (ADS) offer a promising path forward — reducing manual bottlenecks while amplifying contextual relevance.

In today’s hyper-dynamic landscape, user experience extends far beyond usability. It now requires on-demand scalability, sustainability, and intelligent responsiveness. As algorithms grow more complex and solutions more distributed, the need for systems that adapt fluidly — while consuming fewer human and computational resources — becomes paramount.

While established design systems, UX processes, and design thinking frameworks provide structured foundations, they often struggle to keep pace with the fluidity of evolving user expectations. ADS emerges to help close this gap — automating essential tasks, personalizing experiences at scale, and empowering designers to focus on thoughtful curation rather than repetitive construction.

Modern users no longer settle for merely functional interfaces. They expect immersive, intuitive, and emotionally resonant journeys — interfaces that adapt to them, not the other way around. ADS aspires to meet these expectations by enabling design flows that evolve continuously, without bloating the system or overwhelming the individual.

ADS is not confined to the realm of software development. The principles of design thinking and modular systems can establish their footprint across every domain where user experience plays a role. Consider, for example, how ADS could enhance industries as varied as healthcare, finance, education, and smart home ecosystems. Exploring these domains highlights the potential breadth and effectiveness of this approach.

18.1 Atomic Principles Then and Tomorrow

“While the foundational tenets of Atomic Design remain strong, there is a growing need to scale how it models the relationships between components. Design constructs must now adapt not only by structure, but also by contextual needs and on-demand user behavior.” Static atoms, molecules, and organisms can no longer thrive in isolation when expectations are dynamic, multidimensional, and personalized.

Agentic Design Systems (ADS) build on these foundations by blending Atomic structure with Gestalt perception — enabling design systems not just to construct but to sense, adapt, and re-stitch experiences dynamically based on real-time input.

This convergence yields a system that goes beyond delivering components. Instead, it orchestrates formations that continuously adjust to individual contexts while preserving the coherence and scalability of the overall design framework.

18.2 Design Singularity: The North Star of ADS

The aspiration is a single intelligent system that crafts experiences unique to each user and evolves autonomously, without constant manual oversight.

The ultimate goal of Agentic Design Systems is a world where interfaces adapt not just across screens but across platforms, products, and even industries, guided by each user's behavioral fingerprint. This isn't about homogenization or erasing brand identities. It's about enabling radical individualization without losing a business's signature or the integrity of its model.

This vision — already outlined in our ADS v2.0 direction — champions the modularization of experience through universal UX tokens and intelligent decoupling of interface components. In practice, this means that a system can learn not only what users prefer, but how they navigate, and reconstruct flows to match those behaviors in real time.

Imagine decoupling components organically — reshaping layouts, gestures, and journeys based on personal history — so every interaction feels familiar yet brand-authentic. The intent is to scale the surface experience, not the underlying complexity.
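To make "universal UX tokens" a little more concrete, here is a minimal TypeScript sketch. It assumes a hypothetical token model in which each token is marked either brand-locked or adaptable: behavioral adaptations may change adaptable tokens (type size, tap-target spacing, navigation position) but never brand-locked ones, which is one possible way to keep personalization brand-authentic. The token names, the `brandLocked` flag, and `resolveTokens` are illustrative assumptions, not part of any published ADS specification or tooling API.

```typescript
// Hypothetical token model for illustration; not a published ADS or tooling API.
type TokenValue = string | number;

interface DesignToken {
  value: TokenValue;
  // Brand-locked tokens carry the business signature and ignore adaptations.
  brandLocked: boolean;
}

type TokenSet = Record<string, DesignToken>;

// Overrides inferred from one user's behavior, keyed by token name.
type Adaptation = Record<string, TokenValue>;

interface Resolution {
  tokens: Record<string, TokenValue>;
  rejected: string[]; // adaptation keys that were not applied
}

// Start from the brand base, then apply adaptations only to tokens that
// exist in the base and are not brand-locked.
function resolveTokens(base: TokenSet, adaptation: Adaptation): Resolution {
  const tokens: Record<string, TokenValue> = {};
  for (const name of Object.keys(base)) {
    tokens[name] = base[name].value;
  }
  const rejected: string[] = [];
  for (const name of Object.keys(adaptation)) {
    const known = Object.prototype.hasOwnProperty.call(base, name);
    if (!known || base[name].brandLocked) {
      rejected.push(name);
    } else {
      tokens[name] = adaptation[name];
    }
  }
  return { tokens, rejected };
}

const brandBase: TokenSet = {
  "color.brand.primary": { value: "#0050b3", brandLocked: true },
  "font.family.base": { value: "Inter", brandLocked: true },
  "font.size.body": { value: 16, brandLocked: false },
  "spacing.tapTarget": { value: 44, brandLocked: false },
  "layout.nav.position": { value: "top", brandLocked: false },
};

// Example: a user who often zooms and navigates one-handed (hypothetical signal).
const inferred: Adaptation = {
  "font.size.body": 18,
  "spacing.tapTarget": 48,
  "layout.nav.position": "bottom",
  "color.brand.primary": "#ff0000", // ignored: brand-locked
};

const result = resolveTokens(brandBase, inferred);
console.log(result.tokens["font.size.body"]); // → 18
console.log(result.rejected); // lists only "color.brand.primary"
```

The design choice here is deliberately conservative: unknown keys are rejected rather than added, so adaptation can reshape the surface experience without growing the token vocabulary or the underlying complexity.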

Design singularity is not just a technological ambition. It is an ethical, strategic, and cultural frontier. It asks us to envision a world where users no longer adapt to systems. Systems adapt to them — continuously, invisibly, and respectfully.

18.3 Human-AI Collaboration in UX: Designers Become Curators

Designers are evolving from manually crafting every screen to orchestrating, supervising, and fine-tuning AI-generated flows. In the past, designers defined experiences end-to-end — every page, every state. With Agentic Design Systems, the role shifts to a more strategic form of design engineering.

Here, we no longer design static pages in isolation. We design the components, the rules, and the relationships that allow experiences to assemble dynamically. ADS makes Gestalt and Atomic principles more important than ever, because the success of AI-generated experiences depends on the integrity and adaptability of these foundational building blocks.
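One way to read "designing the rules and relationships" is as a declarative ruleset that maps user context to components, slot by slot. The TypeScript sketch below assumes a hypothetical `UserContext`, hypothetical component names, and a simple highest-priority-wins policy per layout slot; it illustrates the curator's role (authoring rules, not screens) rather than any specific ADS engine, and a real system would likely need richer conflict resolution.

```typescript
// Hypothetical context and component names, for illustration only.
interface UserContext {
  device: "mobile" | "desktop";
  prefersReducedMotion: boolean;
  returningUser: boolean;
}

interface Rule {
  slot: string; // where in the layout the component goes
  component: string; // component identifier from the design system's library
  priority: number; // higher wins when several applicable rules target a slot
  when: (ctx: UserContext) => boolean;
}

const compositionRules: Rule[] = [
  { slot: "navigation", component: "TopNavBar", priority: 0, when: () => true },
  { slot: "navigation", component: "BottomTabBar", priority: 10, when: (c) => c.device === "mobile" },
  { slot: "hero", component: "AnimatedHero", priority: 0, when: () => true },
  { slot: "hero", component: "StaticHero", priority: 20, when: (c) => c.prefersReducedMotion },
  { slot: "onboarding", component: "GuidedTour", priority: 0, when: (c) => !c.returningUser },
];

// Assemble a layout: for each slot, keep the highest-priority rule that applies.
function assemble(ctx: UserContext, ruleset: Rule[]): Record<string, string> {
  const chosen = new Map<string, Rule>();
  for (const rule of ruleset) {
    if (!rule.when(ctx)) continue;
    const current = chosen.get(rule.slot);
    if (current === undefined || rule.priority > current.priority) {
      chosen.set(rule.slot, rule);
    }
  }
  const layout: Record<string, string> = {};
  chosen.forEach((rule, slot) => {
    layout[slot] = rule.component;
  });
  return layout;
}

const mobileLayout = assemble(
  { device: "mobile", prefersReducedMotion: true, returningUser: true },
  compositionRules,
);
// navigation → BottomTabBar, hero → StaticHero, and no onboarding slot
console.log(mobileLayout);
```

Because every component still comes from the system's library, the assembled result stays within the Atomic vocabulary; the rules only decide which formation appears for a given context.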

18.4 Ethical Design: Adapting for Inclusivity, Not Just Efficiency

“One world, one design” may sound appealing, but in the context of ADS it is a misleading oversimplification. True inclusivity isn't about forcing one universal solution on everyone. It's about ensuring that every adaptation, no matter how personalized, still respects core principles of accessibility, privacy, and equity.

Agentic Design Systems must be held to the highest standards of ethical design. That means:

- Consistently meeting WCAG requirements across all adaptive variants.

- Protecting user privacy and data security as systems learn behavioral patterns.

- Recognizing vulnerable groups — such as minors or users with cognitive challenges — and adjusting content, interactions, and visual density accordingly.
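The first requirement in the list above, meeting WCAG across every adaptive variant, lends itself to automated checks. The sketch below audits hypothetical theme variants against the WCAG 2.x contrast-ratio formula for text (Success Criterion 1.4.3, Level AA: at least 4.5:1 for normal text, 3:1 for large text). The `Variant` shape and the variant names are assumptions for illustration, and contrast is only one of many success criteria an adaptive variant has to satisfy.

```typescript
// Sketch: audit adaptive variants against WCAG 2.x text contrast (SC 1.4.3, AA).
// The Variant shape and variant names are hypothetical.
interface Variant {
  name: string;
  foreground: string; // #rrggbb
  background: string; // #rrggbb
  largeText: boolean; // large text needs only 3:1 at Level AA
}

function parseHex(hex: string): [number, number, number] {
  const m = /^#([0-9a-f]{6})$/i.exec(hex);
  if (!m) throw new Error(`Expected #rrggbb, got ${hex}`);
  const n = parseInt(m[1], 16);
  return [(n >> 16) & 0xff, (n >> 8) & 0xff, n & 0xff];
}

// WCAG relative luminance of an sRGB color. The 0.04045 threshold and the
// older 0.03928 value give identical results for 8-bit channels.
function relativeLuminance(hex: string): number {
  const [r, g, b] = parseHex(hex).map((channel) => {
    const c = channel / 255;
    return c <= 0.04045 ? c / 12.92 : Math.pow((c + 0.055) / 1.055, 2.4);
  });
  return 0.2126 * r + 0.7152 * g + 0.0722 * b;
}

// Contrast ratio (L1 + 0.05) / (L2 + 0.05), where L1 is the lighter color.
function contrastRatio(a: string, b: string): number {
  const la = relativeLuminance(a);
  const lb = relativeLuminance(b);
  const [lighter, darker] = la >= lb ? [la, lb] : [lb, la];
  return (lighter + 0.05) / (darker + 0.05);
}

// Names of variants that fall below their AA threshold.
function failingVariants(variants: Variant[]): string[] {
  return variants
    .filter((v) => contrastRatio(v.foreground, v.background) < (v.largeText ? 3 : 4.5))
    .map((v) => v.name);
}

const themeVariants: Variant[] = [
  { name: "default", foreground: "#1a1a1a", background: "#ffffff", largeText: false },
  { name: "dark", foreground: "#e6e6e6", background: "#121212", largeText: false },
  { name: "muted-adaptive", foreground: "#999999", background: "#ffffff", largeText: false },
  { name: "large-heading", foreground: "#767676", background: "#ffffff", largeText: true },
];

console.log(failingVariants(themeVariants)); // only "muted-adaptive" fails
```

Run as part of the pipeline that generates variants, a check like this can block an adaptation before it reaches users rather than detecting the problem afterwards.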

In ADS, inclusivity becomes a living mandate: a design that understands the user without exploiting them, adapting dynamically while never compromising dignity or trust.

19. Risks and Ethical Considerations

While ADS unlocks unprecedented personalization, it raises essential considerations:

- Privacy: Continuous behavioral tracking requires explicit consent, clear opt-outs, and adherence to data minimization principles (see GDPR Article 5).

- Bias: AI models may inadvertently amplify biases present in historical training data, requiring bias audits and corrective tuning; the user-centered evaluation practices of ISO 9241-210 offer a complementary check.

- Overpersonalization: Excessive adaptation could create filter bubbles, or cause confusion on shared devices and public terminals where several people use the same interface.

- Accessibility Drift: Without continuous monitoring, automated adaptations might unintentionally compromise clarity or fail WCAG success criteria.
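The privacy consideration above can be enforced at the point of collection. The sketch below gates behavioral events on purpose-specific consent and keeps only the fields each purpose declares, in the spirit of the data minimization principle in GDPR Article 5. The purposes, field lists, and event shape are hypothetical assumptions, and the sketch covers collection only, not storage, retention, or revocation workflows.

```typescript
// Hypothetical purposes and event shape; not tied to any real analytics SDK.
type Purpose = "layoutAdaptation" | "accessibilityAdaptation";

interface BehaviorEvent {
  userId: string;
  timestamp: number;
  type: string;
  target: string;
  [field: string]: unknown; // extra fields a naive tracker might capture
}

// Purposes the user has explicitly opted into; removing one is an opt-out.
type ConsentState = Set<Purpose>;

// Data minimization: each purpose lists the only fields it may keep.
const fieldsByPurpose: Record<Purpose, readonly string[]> = {
  layoutAdaptation: ["type", "target"],
  accessibilityAdaptation: ["type", "target", "zoomLevel"],
};

// Returns a minimized record, or null when the user has not consented.
function collect(
  evt: BehaviorEvent,
  purpose: Purpose,
  consent: ConsentState,
): Record<string, unknown> | null {
  if (!consent.has(purpose)) return null;
  const kept: Record<string, unknown> = {};
  for (const field of fieldsByPurpose[purpose]) {
    if (Object.prototype.hasOwnProperty.call(evt, field)) {
      kept[field] = evt[field];
    }
  }
  return kept;
}

const userConsent: ConsentState = new Set<Purpose>(["accessibilityAdaptation"]);
const sampleEvent: BehaviorEvent = {
  userId: "u-123",
  timestamp: 1700000000000,
  type: "pinchZoom",
  target: "article.body",
  zoomLevel: 1.5,
  gpsLocation: "51.5,-0.1",
};

console.log(collect(sampleEvent, "layoutAdaptation", userConsent)); // → null
console.log(collect(sampleEvent, "accessibilityAdaptation", userConsent));
// keeps type, target and zoomLevel; drops userId, timestamp and gpsLocation
```

Allow-listing fields per purpose, rather than deny-listing sensitive ones, means a newly captured field is dropped by default until someone justifies keeping it.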

20. Protecting and Crediting the ADS Methodology

- Agentic Design Systems (ADS) is an original methodology authored and documented by Kailas Pramodh. This framework is protected under applicable copyright and intellectual property laws. Reuse, reproduction, or adaptation of this work — whether in part or in full — is permitted only with explicit credit to the author. Trademark registration is under consideration, and this document serves as a public timestamp of authorship and ownership.

21. References

Suggested References and Frameworks

- ISO 9241-210: Human-Centered Design for Interactive Systems

- WCAG 2.2 (Web Content Accessibility Guidelines): Accessibility standards for digital products

- GDPR (EU General Data Protection Regulation): Privacy and data protection requirements

- Nielsen Norman Group: Usability heuristics and UX research

- Material Design (Google): Component-based design frameworks

- Carbon Design System (IBM): Enterprise-scale design systems

- Adobe Sensei: AI-powered design and content intelligence

- Evolv AI: Continuous optimization of digital experiences
